Find me on Google Scholar.

Inclusive Avatar Guidelines for People with Disabilities: Supporting Disability Representation in Social Virtual Reality

CHI 2025

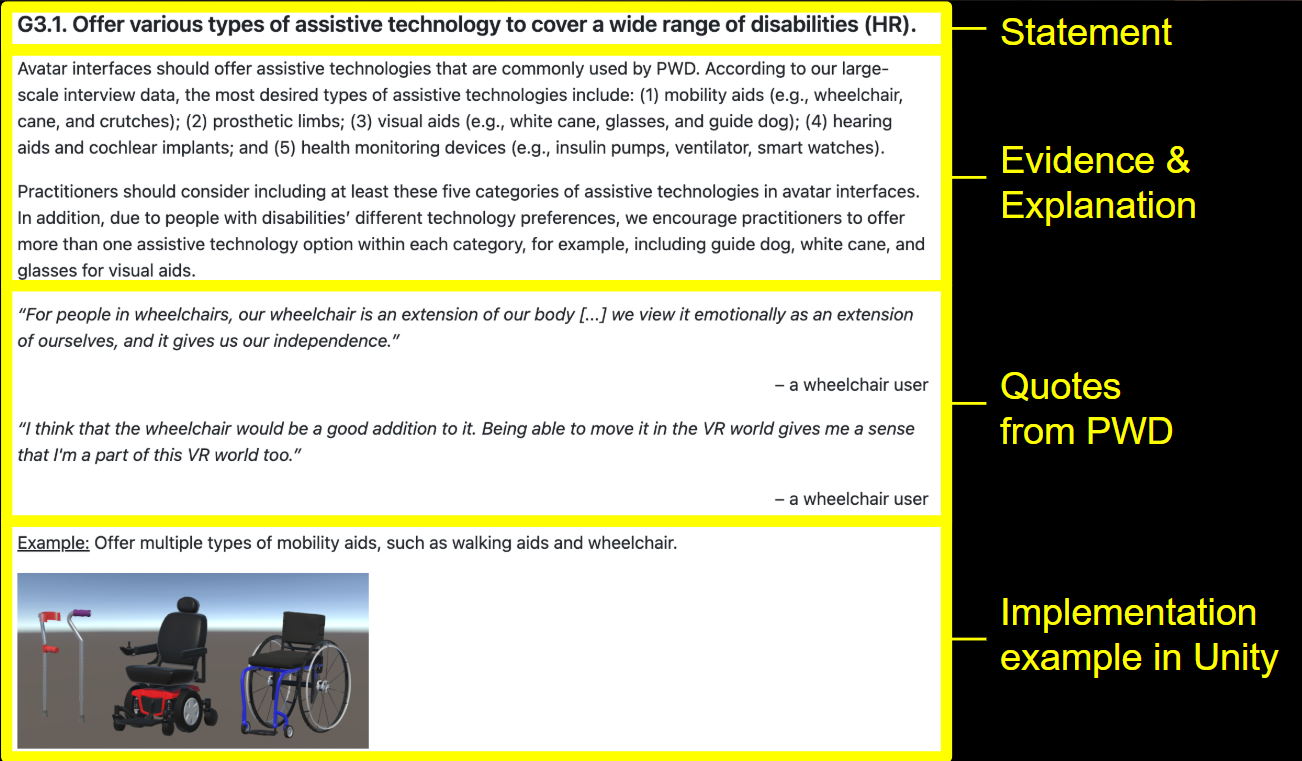

Avatars are a critical medium for identity representation in social virtual reality (VR). However, options for disability expression are highly limited on current avatar interfaces. Improperly designed disability features may even perpetuate misconceptions about people with disabilities (PWD). As more PWD use social VR, there is an emerging need for comprehensive design standards that guide developers and designers to create inclusive avatars. Our work aims to advance avatar design practices by delivering a set of centralized, comprehensive, and validated design guidelines that are easy to adopt, disseminate, and update. Through a systematic literature review and interviews with 60 participants with various disabilities, we derived 20 initial design guidelines that cover diverse disability expression methods through five aspects: avatar appearance, body dynamics, assistive technology design, peripherals around avatars, and customization control. We further evaluated the guidelines via a heuristic evaluation study with 10 VR practitioners, validating the guideline coverage, applicability, and actionability. Our evaluation resulted in a final set of 17 design guidelines with recommendation levels.

Exploring the Design Space of Optical See-through AR Head-Mounted Displays to Support First Responders in the Field

CHI 2024

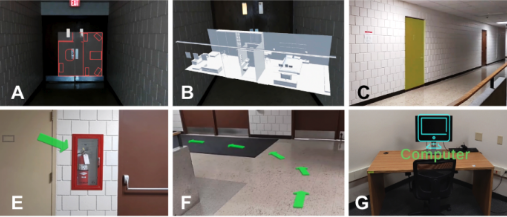

First responders (FRs) navigate hazardous, unfamiliar environments in the field (e.g., mass-casualty incidents), making life-changing decisions in a split second. AR head-mounted displays (HMDs) have shown promise in supporting them due to their capability of recognizing and augmenting challenging environments in a hands-free manner. However, the design space has not been thoroughly explored by involving various FRs who serve different roles (e.g., firefighters, law enforcement) but collaborate closely in the field. We interviewed 26 first responders in the field who experienced a state-of-the-art optical see-through AR HMD, as well as its interaction techniques and four types of AR cues (i.e., overview cues, directional cues, highlighting cues, and labeling cues), soliciting their first-hand experiences, design ideas, and concerns. Our study revealed both generic and role-specific preferences and needs for AR hardware, interactions, and feedback, and identified desired AR designs tailored to urgent, risky scenarios (e.g., affordance augmentation to facilitate fast and safe action). While participants acknowledged the value of AR HMDs, they also raised concerns around trust, privacy, and proper integration with other equipment. Finally, we derived comprehensive and actionable design guidelines to inform future AR systems for in-field FRs.

“I Try to Represent Myself as I Am”: Self-Presentation Preferences of People with Invisible Disabilities through Embodied Social VR Avatars

ASSETS 2024

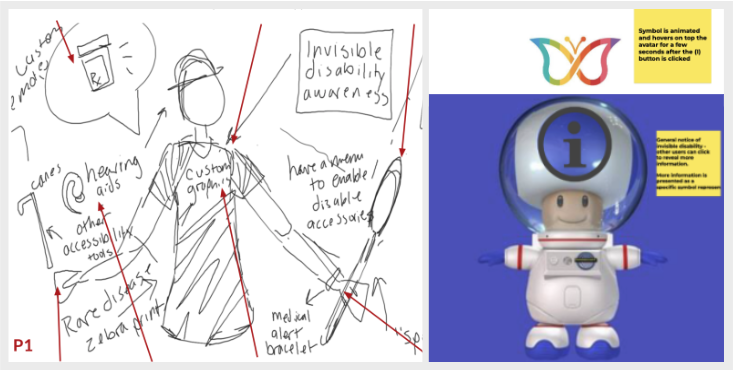

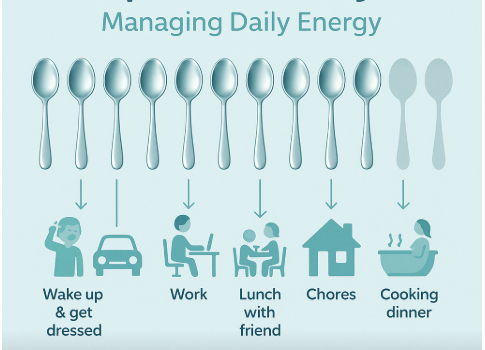

With the increasing adoption of social virtual reality (VR), it is critical to design inclusive avatars. While researchers have investigated how and why blind and d/Deaf people wish to disclose their disabilities in VR, little is known about the preferences of many others with invisible disabilities (e.g., ADHD, dyslexia, chronic conditions). We filled this gap by interviewing 15 participants, each with one to three invisible disabilities, who represented 22 different invisible disabilities in total. We found that invisibly disabled people approached avatar-based disclosure through contextualized considerations informed by their prior experiences. For example, some wished to use VR’s embodied affordances, such as facial expressions and body language, to dynamically represent their energy level or willingness to engage with others, while others preferred not to disclose their disability identity in any context. We define a binary framework for embodied invisible disability expression (public and private) and discuss three disclosure patterns (Activists, Non-Disclosers, and Situational Disclosers) to inform the design of future inclusive VR experiences.

A diary study in social virtual reality: Impact of avatars with disability signifiers on the social experiences of people with disabilities

ASSETS 2023

People with disabilities (PWD) have shown a growing presence in emerging social virtual reality (VR). To support disability representation, some social VR platforms have started to include disability features in avatar design. However, it is unclear how disability disclosure via avatars (and the way it is presented) would affect PWD’s social experiences and interaction dynamics with others. To fill this gap, we conducted a diary study with 10 PWD who freely explored VRChat, a popular commercial social VR platform, for two weeks, comparing their experiences between using regular avatars and avatars with disability signifiers (i.e., avatar features that indicate the user’s disability in real life). We found that PWD preferred using avatars with disability signifiers and wanted to further enhance their aesthetics and interactivity. However, such avatars also caused embodied, explicit harassment targeting PWD. We revealed the unique factors that led to such harassment and derived design implications and protection mechanisms to inspire safer and more inclusive social VR.

“Invisible Illness Is No Longer Invisible”: Making Social VR Avatars More Inclusive for Invisible Disability Representation

ASSETS 2023 Poster

As social virtual reality (VR) experiences become more popular, it is critical to design accessible and inclusive embodied avatars. At present, there are few, if any, customization features for invisible disabilities (e.g., chronic health conditions, mental health conditions, neurodivergence) in social VR platforms. To our knowledge, researchers have yet to explore how people with invisible disabilities want to self-represent and disclose disabilities through social VR avatars. We fill this gap in current accessibility research by centering the experiences and preferences of people with invisible disabilities. We conducted semi-structured interviews with nine participants and found that people with invisible disabilities used a unique, indirect approach to inform dynamic disclosure practices. Participants were interested in toggling representation on/off across contexts and shared ideas for representation through avatar design. In addition, they proposed ways to make the customization process more accessible (e.g., making it easier to import custom designs). We see our work as a vital contribution to the growing literature that calls for more inclusive social VR.

“It’s Just Part of Me:” Understanding Avatar Diversity and Self-presentation of People with Disabilities in Social Virtual Reality

ASSETS 2022

In social virtual reality (VR), users are embodied in avatars and interact with other users in a face-to-face manner using avatars as the medium. With the advent of social VR, people with disabilities (PWD) have shown an increasing presence on this new social medium. Given their unique disability identity, it is not clear how PWD perceive their avatars and whether and how they prefer to disclose their disability when presenting themselves in social VR. We fill this gap by exploring PWD’s avatar perception and disability disclosure preferences in social VR. Our study involved two steps. We first conducted a systematic review of fifteen popular social VR applications to evaluate their avatar diversity and accessibility support. We then conducted an in-depth interview study with 19 participants who had different disabilities to understand their avatar experiences. Our research revealed a number of disability disclosure preferences and strategies adopted by PWD (e.g., reflect selective disabilities, present a capable self). We also identified several challenges faced by PWD during their avatar customization process. We discuss the design implications to promote avatar accessibility and diversity for future social VR platforms.